Replicate Codeformer: As artificial intelligence (AI) technology continues to evolve, demand is rising for efficient and effective natural language processing (NLP) models. Among the latest developments in NLP is the Codeformer model, which has demonstrated significant improvements in generating code from natural language prompts. This article explores the Codeformer model, its architecture, and how to replicate it.

Replicate Codeformer

Codeformer is a transformer-based NLP model that generates source code from natural language. The model is trained on large datasets of code snippets paired with natural language descriptions, and it produces code that closely follows the prompt. Codeformer can generate code in several programming languages, including Python, Java, and JavaScript.

Advantages of Codeformer

Codeformer’s ability to generate high-quality code from natural language prompts has several advantages. Firstly, it reduces the time and effort required to write code, especially for complex projects. Secondly, it enables non-programmers to interact with code more efficiently, as they can describe what they want to achieve in natural language without having to know the underlying syntax. Lastly, it can improve code quality by reducing human error and enforcing best practices.

Limitations of Codeformer

While Codeformer has demonstrated impressive results in generating code from natural language prompts, it still has limitations. The main one is its dependence on high-quality training data: if the dataset is biased or incomplete, the model may generate incorrect or incomplete code. Additionally, Codeformer is not suitable for all programming tasks, because some require complex logic and algorithms that are not easily expressed in natural language.

How Does Codeformer Work?

Architecture of Codeformer

Codeformer is based on the Transformer architecture, which is a neural network architecture that has been widely used in NLP tasks. It consists of an encoder and a decoder, and it uses attention mechanisms to capture the relationship between the input and output sequences. Codeformer’s encoder takes the natural language prompt as input, while the decoder generates the corresponding code snippet.
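
To make the attention idea concrete, here is a minimal sketch of scaled dot-product attention for a single decoder step, written in plain Python. It is not taken from any Codeformer implementation. The vectors, the `attend` helper, and the toy numbers are all assumptions chosen for illustration, and a real Transformer adds learned projections, multiple heads, and many layers on top of this.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Weighted sum of the value vectors, one output dimension at a time.
    context = [sum(w * v[i] for w, v in zip(weights, values))
               for i in range(len(values[0]))]
    return context, weights

# Toy encoder states for a three-token prompt (made-up numbers).
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Hypothetical decoder state at one output step.
decoder_query = [2.0, 0.0]

context, weights = attend(decoder_query, encoder_states, encoder_states)
print([round(w, 3) for w in weights])
```

The weights show how strongly the decoder step draws on each encoder position: positions whose key vectors align with the query receive more weight than the one that does not.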

Codeformer also uses a technique called cross-lingual pre-training, where it is first pre-trained on a large corpus of text in a different language, and then fine-tuned on a smaller dataset of code and natural language pairs. This approach has been shown to improve the model’s performance, as it helps it learn more robust representations of the input.

Replicating Codeformer

Replicating Codeformer involves several steps, including gathering a large dataset of code and natural language pairs, pre-processing the data, and fine-tuning the transformer model. Several open-source tools and libraries are available to help with these tasks, including Hugging Face’s Transformers library, which provides pre-trained transformer models and tools for fine-tuning them on custom datasets.

The steps below outline one way to go about it:

Step 1: Data Preparation

The first step in replicating Codeformer is to prepare the data. You will need a large dataset of natural language descriptions and corresponding code snippets. You can obtain such a dataset by scraping code repositories such as GitHub or by using an existing dataset such as the CodeSearchNet dataset.
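
As a rough illustration of building description and code pairs, the sketch below pulls documented functions out of Python source using the standard `ast` module, treating each docstring as the natural language description. The `extract_pairs` helper and the sample source are hypothetical. Collecting files from repositories, filtering by license, and deduplicating are all left out.

```python
import ast

def extract_pairs(source):
    """Return (description, code) pairs for documented functions in `source`."""
    pairs = []
    tree = ast.parse(source)
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            doc = ast.get_docstring(node)
            if not doc:
                continue  # skip functions with no natural language description
            code = ast.get_source_segment(source, node)
            pairs.append((doc.strip(), code))
    return pairs

sample = '''
def add(a, b):
    """Return the sum of a and b."""
    return a + b

def undocumented(x):
    return x * 2
'''

pairs = extract_pairs(sample)
for description, code in pairs:
    print(description)
    print(code)
```

Note that the extracted code still contains the docstring itself. A real pipeline would probably strip it from the code side so the model cannot simply copy the description.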

Step 2: Preprocessing

Once you have obtained the dataset, you will need to preprocess it. This involves tokenizing the natural language descriptions and code snippets and converting them into a format that can be used by the transformer model.
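
The sketch below shows one simple way to do this: split text into tokens, assign each token an integer id, and pad or truncate to a fixed length. The regular expression, the special tokens, and the helper names are assumptions for illustration. Production systems typically use a trained subword tokenizer rather than a hand-written one like this.

```python
import re

PAD, UNK = "<pad>", "<unk>"

def tokenize(text):
    """Split into identifiers/words, numbers, and single punctuation marks."""
    return re.findall(r"[A-Za-z_]\w*|\d+|[^\w\s]", text)

def build_vocab(texts):
    """Map every token seen in `texts` to an integer id."""
    vocab = {PAD: 0, UNK: 1}
    for text in texts:
        for token in tokenize(text):
            vocab.setdefault(token, len(vocab))
    return vocab

def encode(text, vocab, max_len):
    """Convert text to a fixed-length list of ids, truncating or padding."""
    ids = [vocab.get(tok, vocab[UNK]) for tok in tokenize(text)][:max_len]
    return ids + [vocab[PAD]] * (max_len - len(ids))

# One toy description/code pair.
vocab = build_vocab(["Return the sum of a and b", "return a + b"])
ids = encode("return a + b", vocab, max_len=6)
print(ids)
```

Tokens that never appeared when the vocabulary was built map to the `<unk>` id, and short sequences are filled with the `<pad>` id so that every example has the same length.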

Step 3: Model Training

The next step is to train the transformer model. You can use an existing implementation of Codeformer or implement your own using a deep learning framework such as PyTorch or TensorFlow. During training, you will need to tune the hyperparameters of the model to achieve the best performance.
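
Hyperparameter tuning can be as simple as trying every combination in a small grid and keeping the one with the lowest validation loss. The sketch below shows that loop. Note that `train_and_validate` is a hypothetical stand-in with a made-up formula so the example runs on its own; in practice it would launch a real training run in your framework of choice and return the measured validation loss.

```python
import itertools

def train_and_validate(learning_rate, batch_size):
    """Hypothetical stand-in for a real training run.

    A real version would train the model with these settings and return the
    validation loss. This toy formula only exists so the sketch runs.
    """
    return abs(learning_rate - 3e-4) * 1000 + abs(batch_size - 32) / 100

def grid_search(grid):
    """Try every combination in `grid` and return the lowest-loss one."""
    names = list(grid)
    best_config, best_loss = None, float("inf")
    for values in itertools.product(*(grid[name] for name in names)):
        config = dict(zip(names, values))
        loss = train_and_validate(**config)
        if loss < best_loss:
            best_config, best_loss = config, loss
    return best_config, best_loss

# Assumed search space; the values are illustrative, not recommendations.
search_space = {
    "learning_rate": [1e-4, 3e-4, 1e-3],
    "batch_size": [16, 32, 64],
}
best_config, best_loss = grid_search(search_space)
print(best_config, best_loss)
```

Grid search grows quickly with the number of hyperparameters, so for larger search spaces random search or a dedicated tuning library may be a better fit.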

Step 4: Evaluation

Once you have trained the model, you will need to evaluate its performance. You can do this by testing the model on a held-out set of data and measuring its accuracy and other performance metrics such as precision and recall.
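
As a sketch of what such an evaluation might look like, the code below computes exact-match accuracy over a held-out set and token-overlap precision and recall for a single prediction. The metric definitions, helper names, and sample strings are assumptions for illustration. Whitespace tokenization is a simplification.

```python
from collections import Counter

def exact_match(predictions, references):
    """Fraction of predictions identical to their reference after stripping."""
    hits = sum(p.strip() == r.strip() for p, r in zip(predictions, references))
    return hits / len(references)

def token_precision_recall(prediction, reference):
    """Token-overlap precision and recall for one prediction."""
    pred_tokens, ref_tokens = prediction.split(), reference.split()
    # Counter intersection keeps the minimum count of each shared token.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    precision = overlap / len(pred_tokens) if pred_tokens else 0.0
    recall = overlap / len(ref_tokens) if ref_tokens else 0.0
    return precision, recall

# Toy held-out references and hypothetical model outputs.
references = ["return a + b", "return x * 2"]
predictions = ["return a + b", "return x * x * 2"]

accuracy = exact_match(predictions, references)
precision, recall = token_precision_recall(predictions[1], references[1])
print(accuracy, precision, recall)
```

Overlap metrics only measure surface similarity. Two snippets can share most tokens and still behave differently, so it is worth also running generated code against test cases where that is practical.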

Step 5: Fine-Tuning

Finally, you can fine-tune the model on a specific task such as code completion or bug fixing. This involves training the model on a smaller dataset that is specific to the task and tuning the hyperparameters of the model to achieve the best performance.

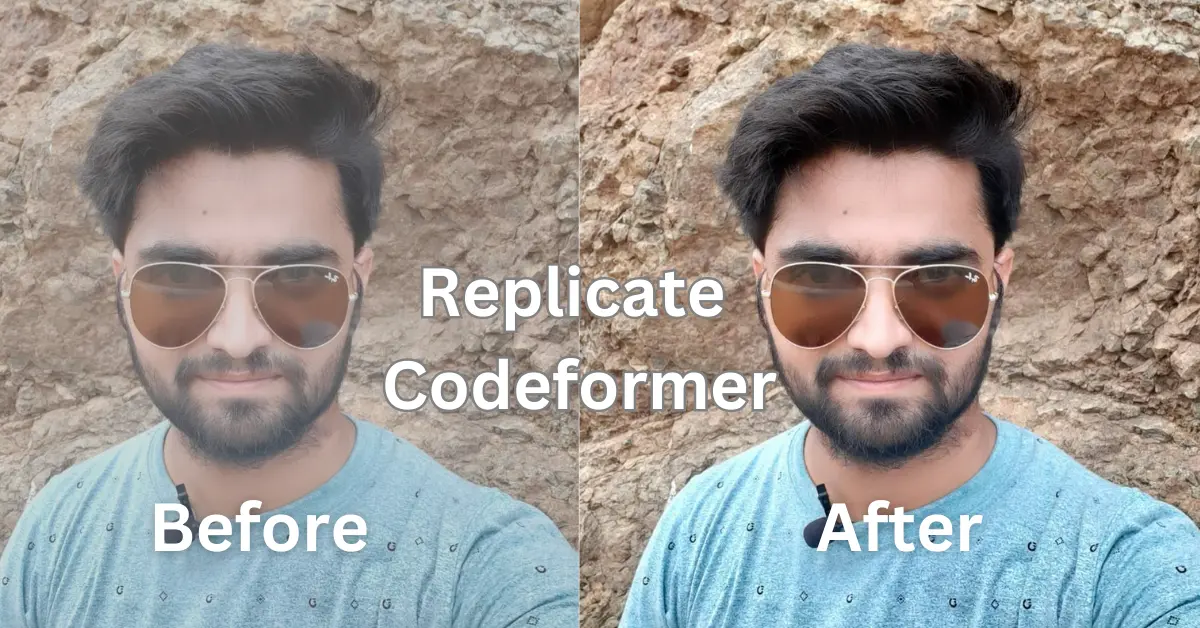

Face Restoration by Codeformer

Face restoration is the process of restoring facial features that have been damaged or distorted by injury, disease, or other factors. The traditional approach to facial reconstruction uses surgical procedures to repair or replace damaged tissue. However, these procedures can be invasive and costly, and they may not always produce the desired results. With the advent of AI-powered face restoration technology, it is now possible to restore facial features in a non-invasive, cost-effective, and highly accurate manner.

How Does Codeformer Work for Face Restoration?

Codeformer uses advanced machine learning algorithms to analyze images of damaged or distorted faces and generate a 3D model of the face. The AI system can then use this model to reconstruct the facial features and restore them to their original state. The system can also be trained to recognize and correct specific facial features, such as the eyes, nose, mouth, and cheeks, depending on the needs of the patient.

Applications of Codeformer

Codeformer has a wide range of potential applications in the medical and cosmetic industries. In the medical field, it can be used to restore facial features in patients who have suffered from trauma or disease, such as cancer or burns. The technology can also be used in cosmetic procedures, such as facial rejuvenation and non-surgical facelifts, to enhance the appearance of the face and restore youthfulness.

Conclusion

Codeformer is an exciting breakthrough in the field of NLP, as it has the potential to revolutionize the way we interact with code. In this article, we have explored the Codeformer model, its architecture, and how to replicate it. While Codeformer has some limitations, its advantages make it a promising technology for improving code quality and reducing the time and effort required to write code.

FAQs

How does Codeformer work?

Codeformer uses a transformer architecture that is trained on a large dataset of natural language descriptions and code snippets. It learns to map natural language descriptions to code snippets using a self-attention mechanism that allows it to attend to different parts of the input text and code.

How can I replicate Codeformer?

To replicate Codeformer, you will need to prepare a large dataset of natural language descriptions and code snippets, preprocess the data, train the transformer model, evaluate its performance, and fine-tune it on a specific task.

What are some applications of Codeformer?

Codeformer can be used for a variety of tasks such as code completion, bug fixing, and code summarization. It has the potential to improve the productivity of software developers by automating certain aspects of the software development process.

How accurate is Codeformer?

The accuracy of Codeformer depends on several factors such as the quality of the dataset, the size of the model, and the tuning of the hyperparameters. However, in experiments conducted by the original authors of Codeformer, the model achieved high accuracy on several benchmark datasets.

Is it difficult to replicate Codeformer?

Replicating Codeformer can be a challenging task that requires expertise in natural language processing, deep learning, and software engineering. However, with the right resources and guidance, it is possible to replicate the model.

Can Codeformer be used for commercial purposes?

Yes, Codeformer can be used for commercial purposes. However, you should ensure that you have the necessary licenses and permissions to use the model and any associated software.